Who Gets to Define What’s “Reliable” on Wikipedia?

Wikipedia is once again a target in the culture wars, accused of bias for enforcing its reliability standards. Meanwhile, Wikimedia is introducing new measures to protect editors from harassment.

Deprecated sources icon CC BY-SA 3.0 from Wikipedia

📰 In the News

Wikipedia, Bias, and the Battle Over Reality

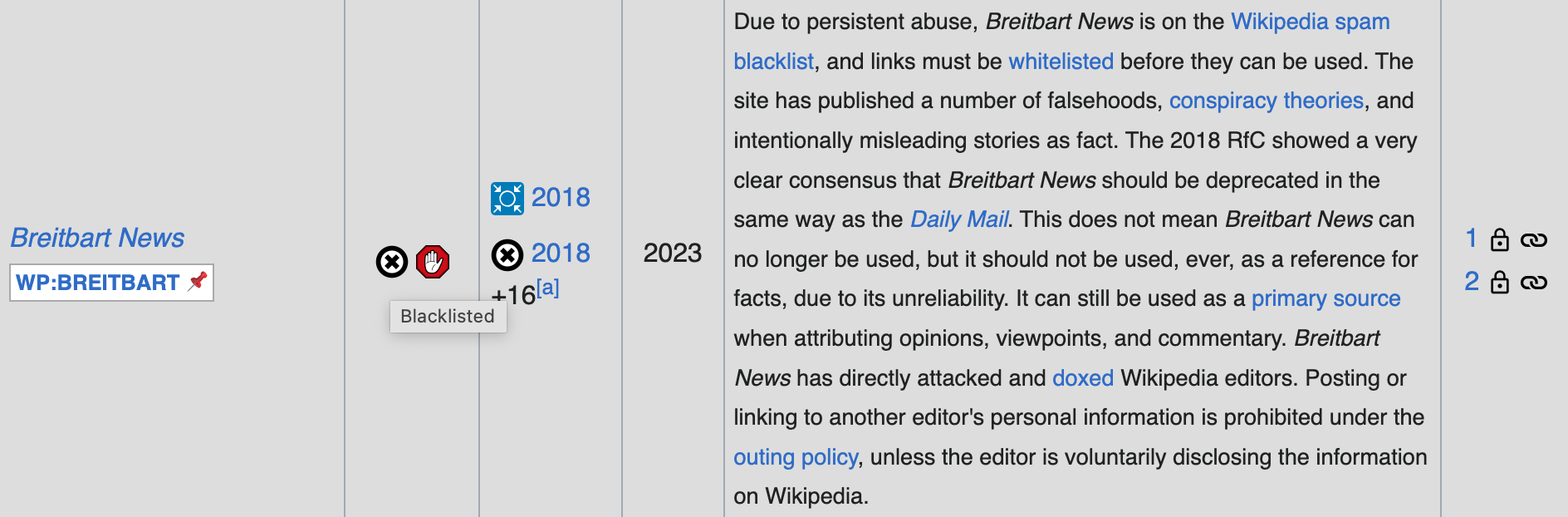

Much as WikiWise would prefer to lead with another topic, Wikipedia’s role in today’s political polarization remains a major story. We’ve covered criticisms from Elon Musk, the Manhattan Institute, and a more extreme plan from The Heritage Foundation. This week, a new critique comes from the Media Research Center (MRC), accusing Wikipedia of “blacklisting” conservative media.

Screenshot CC BY-SA 4.0 from Wikipedia

Like the Manhattan Institute’s study, MRC’s report lacks depth. It condemns the restriction on Breitbart News, yet it includes a screenshot that explains the reason for it: beyond spreading falsehoods and conspiracies, Breitbart has doxxed Wikipedia editors and repeatedly spammed the site.

Still, the report gained traction. The New York Post editorial board called for “Big Tech to block Wikipedia” without saying what that means. The Times of London, a conservative-leaning newspaper, simply noted that its own publications are considered “generally reliable” by Wikipedia. Meanwhile, Columbia Journalism Review, Slate, and The Atlantic—all considered reliable by Wikipedia but dismissed as left-wing by MRC—pushed back. As The Atlantic’s Lila Shroff put it, Wikipedia’s status as a “last bastion of shared reality” is “precisely why it’s under attack”.

The key takeaway for PR and business professionals: Wikipedia’s reliability standards are driven by editorial consensus, not politics. Businesses looking to improve their presence should focus on meeting those standards—using high-quality, independent sources—rather than lobbying for Wikipedia to change its standards. While Wikipedia isn’t immune to controversy, an evidence-based approach remains the best path to fair representation.

🔔 Wiki Briefing

Life During Information Wartime

As attacks on Wikipedia escalate, the Wikimedia Foundation (WMF) is rolling out new measures to protect editors from harassment and legal threats, as reported by 404 Media’s Jason Koebler. One major initiative, long in development, is the “temporary accounts program”, which will assign anonymous editors a temporary username instead of exposing their IP addresses. Some non-English Wikipedia projects already allow registered sock accounts for controversial topics, and there is speculation about similar measures for English Wikipedia.

Screenshot of the “Not for sale” Tweet by Jimmy Wales

Wikimedia is also bolstering legal protections. The Foundation has launched a legal defense program to support editors facing lawsuits related to their Wikipedia contributions, provided they were acting in good faith. It is worth noting, however, that whether WMF has done right by editors under legal threat in India is currently in dispute.

One reason for confidence is Wikipedia’s decentralized governance, which has long made it resilient to outside pressure. These new protections reinforce that strength. Another reason for optimism: as Jimmy Wales has said, Elon Musk can’t buy it.

📚 Research Report

From Russia With Edits

A new tool called INFOGAP, developed by researchers including a Johns Hopkins computer scientist, uses AI to detect cultural biases in Wikipedia’s multilingual content. Analyzing 2,700 biographies from English, Russian, and French Wikipedia, the study found that Russian entries omitted 77% of content from English versions, with LGBT figures disproportionately affected. Researchers suggest INFOGAP could also be applied beyond Wikipedia to study media and political discourse across cultures.

🧩 Wikipedia Facts

According to Wikipedia’s own traffic statistics, the English Wikipedia received more than 4,000 page views per second in 2024.

💡 Tips & Tricks

Finally, a productive time-waster: WikiTok lets you scroll endlessly through random Wikipedia article snippets, turning knowledge into a TikTok-style feed. Built by web developer Isaac Gemal after seeing the idea suggested as a joke, the site ditches algorithms for pure randomness. And he didn’t waste much time—the whole thing took Gemal just two hours.